One of the things I like about data journalism (despite being very frustrating at times) is that it is very often an exercise in problem solving; it makes me think.

Most of the problems that need solving are how to avoid doing something very tedious and time-consuming.

I’ve been working with the Land Registry price paid data recently. At first glance, the data seems fairly straightforward, if very big. Each CSV file for a month contains about 50 to 60,000 lines of data, so there are only a few places that will open more than one file, such as more recent versions of Excel

However, each monthly update contains changes to some of the previous data, either data to be deleted or changed. In order to avoid having duplicates of rows or data you shouldn’t have, the data needs tidying up.

First download all of the data you want and piece all the sheets back to together in one sheet. To keep the data somewhat manageable, I’ve only gone back a year. You may need to add in column headers (see the FAQ) to make keeping track of your data easier.

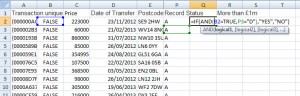

First, sort the record status column, so that all of the data will be in the same order, As then Cs then Ds, when you sort by transaction ID. Sort by transaction ID, this will ensure all the rows are ordered so that if you have more than one row with the same ID, they will now be next to each other.

Now, add a column next to the transaction ID and enter the formula =A2=A3, this will compare one transaction ID to the one below it and will help you identify duplicates. I then copied that column and used the Paste Values option to get rid of the formulas.

I was then going to use Find (CTRL + F) to search for rows where the comparison was TRUE and then delete rows as necessary.

Why not just sort the comparison column and delete all the ones marked TRUE? Because some are rows that need to be deleted but some are rows that need to be replaced with a newer addition, when you sort, you can’t tell which is which.

By about row 1,200 (of 753,000), I realised this was not going to be an efficient method of cleaning the data. And if something feels like it is going to be a very tedious method of achieving something that is good reason to find a better way.

As I was working through the rows, I realised two things, because of the earlier sort, the rows with record status A always came first in the pairs of duplicates and the ones I wanted identify in order to delete were the ones where the record status was A above but the row below was D (you already know you want to delete all the rows where record status is D, they don’t need more identification).

So it would be possible to write a formula to identify these rows.

Add a new column at the end of your data and add the formula =IF(AND(B2=TRUE, P3=”D”),”YES”,”NO”)

The formula will put a YES in the column if the row fits the criteria of being a duplicate where the matching row (the one below) is marked for deletion. Otherwise the formula will return NO.

Why are some words in quotes and not others? Text, otherwise known as strings, needs to be in quotes so Excel can recognise it. The TRUE (or FALSE) are not text, they’re Boolean values – items in this column can only have one of two values (true or false) and Excel recognises this automatically, so no need for the quotes.

Once you’ve copied the formula down, you can do a copy and paste values to get rid of the formulas. Next sort this column to gather all the rows identified as YES together so you can delete them. You can then delete all the rows with the record status D.

Repeat with the rows to be changed, replacing D with C in your formula. This time you will want to delete the rows identified by your formula but leave the Change rows which are the updates to the data you’ve just deleted.

A much quicker way to clean your data and stop duplicates making your calculations inaccurate.

There are probably still better ways to clean up the data, but it is amazing how much you can achieve with Excel formulas and a bit of thought.

For more tips and ideas on working with data, sign up to the mailing list to get updates on the second edition of Getting Started with Data Journalism.